Photo from Unsplash

Originally Posted On: https://www.zaptiva.com/blog/intelligence-utility-40x-hyperdeflation-shift/

Executive Summary

The global economy appears to face a structural transformation unprecedented in its velocity and magnitude. Intelligence, once a scarce, high-cost asset commanding premium compensation, may be transitioning toward near-zero marginal cost as a digital utility. This phenomenon, which can be called the “Hyperdeflationary Shift,” could represent far more than incremental technological progress; it may constitute a fundamental reconfiguration of economic value creation.

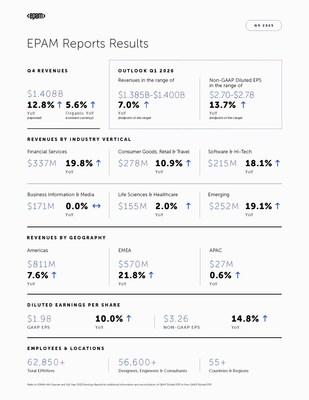

Stanford’s Institute for Human-Centered AI reports that inference costs for AI systems matching GPT-3.5 performance fell more than 280-fold between November 2022 and October 2024 [2], while Epoch AI finds median annual price declines of 40-50x across multiple performance benchmarks [1]. Compared with earlier technologies (electricity took decades to achieve ubiquity), the speed of this transformation challenges conventional economic frameworks and strategic planning horizons.

Traditional macroeconomic indicators, particularly Gross Domestic Product, are increasingly inadequate for capturing value creation in this emerging paradigm. As efficiency gains reduce transaction volumes and computational costs approach zero, activities that dramatically improve human welfare may paradoxically register as economic contractions in legacy measurement systems. This measurement crisis points to a broader shift: competitive advantage appears to be moving from intelligence generation toward intelligence orchestration, from the cognitive labor of “thinking” to the infrastructural challenge of deploying abundant computational resources within existing systems of record.

5 Key Takeaways

- The 40x Annual Deflation Benchmark: AI inference costs show median year-over-year reductions of 40-50x, ranging from 9x to 900x depending on task complexity and performance threshold. This rate of deflation dwarfs historical technological cost curves, including electrification [1, 2].

- Professional Hyperdeflation Across Knowledge Sectors: McKinsey’s November 2025 research estimates that currently demonstrated technologies could, in theory, automate activities accounting for 57% of U.S. work hours, with a disproportionate impact on white-collar cognitive functions previously insulated from technological displacement [3, 4].

- The Wait Equation Strategic Paradox: Accelerating AI capabilities create rational economic incentives to defer complex projects. If superior solutions are expected within 12-18 months at dramatically lower cost, investing immediately can become economically suboptimal, potentially inducing temporary innovation paralysis [5].

- The GDP Measurement Paradox: Hyperefficiency appears as contraction within transaction-based GDP frameworks. When AI-driven solutions eliminate decades of recurring expenditure (chronic disease management, redundant professional services), traditional economic indicators register welfare improvements as output declines [6].

- Value Migration Toward Orchestration: As intelligence becomes a commoditized utility, competitive advantage migrates from computational capacity toward integration infrastructure. The orchestration layer enables AI systems to interact productively with enterprise architectures, legacy systems, and operational workflows [7].

The End of Cognitive Scarcity

Consider an economic reality where PhD-level research, global supply chain architecture, or million-line codebases cost less than artisanal coffee. This transformation unfolds not across decades but within annual cycles. While electrification required roughly five decades to achieve widespread industrial integration, the Intelligence Utility scales in 12-to-18-month iterations, compressing what were once generational transitions into rapid succession.

This velocity creates fundamental dissonance with existing economic structures. Global commerce, professional compensation, educational systems, and capital allocation mechanisms evolved on the premise of cognitive scarcity: human intellectual capacity as a bottleneck resource. Pricing models, service valuations, and labor markets reflect the time and expense required to develop and apply human expertise. As intelligence becomes an abundant utility, these foundational assumptions begin to crumble, rendering traditional economic frameworks increasingly obsolete.

Recent analysis from Epoch AI finds that the cost of reaching specific AI performance thresholds (such as PhD-level performance on science questions) has fallen by roughly 40x per year [1]. The Stanford AI Index 2025 corroborates this trajectory, documenting that inference costs for GPT-3.5-equivalent systems dropped from $20 per million tokens in November 2022 to $0.07 by October 2024, a reduction of roughly 285-fold in about two years [2].
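To see what the Stanford price points imply on a per-year basis, the short Python sketch below converts them into a total reduction and an annualized rate. It assumes a constant compounding rate and counts whole months from November 2022 to October 2024; the function names are illustrative. On those assumptions, this single GPT-3.5-level series works out to roughly 19x per year, inside the 9x to 900x range Epoch reports across tasks, a reminder that the 40-50x figure is a median rather than a rate every benchmark shares.

```python
from __future__ import annotations


def fold_reduction(price_start: float, price_end: float) -> float:
    """How many times cheaper the later price is."""
    return price_start / price_end


def months_between(start: tuple[int, int], end: tuple[int, int]) -> int:
    """Whole months between two (year, month) pairs."""
    return (end[0] - start[0]) * 12 + (end[1] - start[1])


def annualized_factor(total_fold: float, months: int) -> float:
    """Constant yearly reduction factor that compounds to `total_fold` over `months`."""
    return total_fold ** (12 / months)


# Price points quoted above: $20 (Nov 2022) and $0.07 (Oct 2024) per million tokens.
fold = fold_reduction(20.00, 0.07)
months = months_between((2022, 11), (2024, 10))
per_year = annualized_factor(fold, months)

print(f"{fold:.1f}x cheaper over {months} months")  # 285.7x cheaper over 23 months
print(f"about {per_year:.1f}x per year")            # about 19.1x per year
```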

The thesis emerging from this hyperdeflation suggests a significant trend: market value accruing to intelligence generation may be diminishing. The new economic frontier appears to reside in orchestration: the infrastructure, integration, and operational deployment of abundant computational resources within functional business contexts.

The 40x Curve: Intelligence Approaching Zero Marginal Cost

The engine of this transformation is a 40-50x year-over-year decline in AI inference costs at the median, with observed rates ranging from 9x to 900x depending on task complexity and performance threshold [1]. This pace goes well beyond conventional efficiency gains; it represents a structural price collapse for digital cognitive work.
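A constant annual factor compounds quickly. As a minimal sketch, assuming prices fall by the same factor every year and using the $20 per million tokens GPT-3.5-level price cited above as an illustrative starting point, the projection below compares the low end, roughly the median, and the high end of the reported range. The function name is hypothetical.

```python
def projected_price(start_price: float, annual_factor: float, years: float) -> float:
    """Price after `years` if it falls by the same factor every year."""
    return start_price / annual_factor ** years


START_PRICE = 20.0  # dollars per million tokens, the GPT-3.5-level starting price cited above

for factor in (9, 40, 900):  # low end, roughly the median, and high end of the reported range
    path = [projected_price(START_PRICE, factor, year) for year in range(4)]
    print(f"{factor:>3}x/yr: " + "  ".join(f"${price:.6f}" for price in path))
```

At 40x per year, three years of compounding leaves the same workload at 1/64,000 of its starting cost; even at the low end of 9x, it falls to 1/729. The smooth curve is a simplification of what is, in practice, a series of discrete price changes.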

The 40x Hyperdeflationary Curve: AI Inference Cost Collapse (2022-2026)

*Note: This visualization represents approximate cost trajectories synthesized from multiple industry research sources. Actual costs vary by model type, provider, and task complexity. The depicted trend illustrates the general magnitude of cost reduction observed across AI inference benchmarks, not exact pricing for specific implementations. Historical patterns suggest variability in deflation rates across different performance thresholds. Data synthesized from: Stanford HAI AI Index 2025, Epoch AI Research, NVIDIA Inference Economics Reports.

Contemporary AI systems complete complex cognitive tasks at approximately 1% of the cost of human professionals while working about 11 times faster [8]. At the hardware level, costs decline by about 30% annually, while energy efficiency improves by about 40% annually [2]. Algorithmic advances compound these trends, creating layered deflationary pressure across the technology stack.
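Because these layers compound, their effects multiply rather than add. The sketch below is an illustration under loud assumptions: the layers are treated as independent, and the 40% energy-efficiency gain is generously treated as if it passed fully into price (in reality energy is only part of inference cost, so this overstates it). Even so, hardware and energy together account for only about 2x per year, which would leave roughly 20x of a 40x median decline to algorithmic advances and other factors.

```python
def factor_from_cost_decline(percent: float) -> float:
    """A 30% annual cost decline leaves 70% of the cost, i.e. a 1/0.70 ~ 1.43x reduction."""
    return 1 / (1 - percent / 100)


def factor_from_efficiency_gain(percent: float) -> float:
    """A 40% annual efficiency gain means 1.4x more work per unit of energy."""
    return 1 + percent / 100


hardware = factor_from_cost_decline(30)    # ~1.43x per year
energy = factor_from_efficiency_gain(40)   # 1.4x per year, generously treated as a full price cut
physical_layers = hardware * energy        # independent layers multiply rather than add

observed_median = 40.0                     # median annual price decline cited earlier
residual = observed_median / physical_layers

print(f"hardware x energy: {physical_layers:.2f}x per year")
print(f"left for algorithms and everything else: {residual:.1f}x per year")
```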

Research published in November 2025 analyzing inference economics notes that AI capabilities advance so rapidly that quoted compute margins (while appearing robust at 70%) mask the velocity at which entire cost structures collapse [9]. As one analysis articulated, AI inference advances toward being effectively “too cheap to meter,” asymptotically approaching zero marginal cost [10].

When a core component of a product carries almost no market price, enterprises that monetize expertise may face significant challenges. Intelligence could cease to function as a traditional line-item expense or billable service and instead become a background utility, analogous to atmospheric oxygen or telecommunications infrastructure. In this landscape, the act of billing for thinking may cost more than the intelligence itself, disrupting professional service economics.

Professional Hyperdeflation: Why Knowledge Work Faces Structural Hollowing

Cost collapse appears to trigger “Professional Hyperdeflation,” potentially hollowing out prestigious labor market sectors that previously commanded premium compensation [11]. If AI systems can perform PhD-level research or sophisticated software engineering at a fraction of a cent per query, the scarcity premium sustaining six-figure salaries could diminish significantly over time.

The current estimates are striking: McKinsey’s November 2025 report estimates that currently demonstrated technologies (not speculative future capabilities, but systems deployable today) could, in theory, automate activities accounting for 57% of U.S. work hours [3]. This is nearly double McKinsey’s 2023 projection of 30% automation potential by 2030, suggesting a shift from future possibility toward present capability within roughly 24 months [4].

The distributional impact skews heavily toward white-collar cognitive work. One analysis projects that nearly 30% of white-collar roles could be affected (more than 300 million people globally), compared with about 10% of blue-collar roles [12]. As knowledge labor ceases to be a scarce resource, it may no longer drive value creation as it once did. The social contract linking education to income security may dissolve as economies transition from scarcity-based labor markets toward abundance-based utility frameworks.

Financial services, legal research, administrative coordination, content generation, and analytical functions face particularly acute pressure. Companies including IBM, Accenture, and Deloitte have publicly acknowledged pausing entry-level hiring and eliminating tens of thousands of roles, in some cases stating explicitly that AI now performs functions previously requiring human analysts faster and more cost-effectively [13]. McKinsey’s December 2025 workforce reductions (carrying particular symbolic weight given the firm’s standing within white-collar professionalism) signal a broader structural transition as corporations pursue productivity gains by substituting computation for labor [14].

The architectural transformation involves not wholesale job elimination but rather fundamental reconfiguration of human roles within production systems. As automation potential reaches 60-70% of working time in certain professional occupations, remaining human functions concentrate on oversight, verification, strategic judgment, and contexts requiring social-emotional intelligence: domains where computational systems currently demonstrate limitations [3, 15].

The Wait Equation: When Advancement Creates Rational Paralysis

The speed of AI advancement generates a perverse strategic incentive: the Wait Equation. In hyperdeflationary environments, initiating complex, resource-intensive projects today can be economically irrational.

Consider a pharmaceutical research project that currently requires six months of expensive human R&D. If next-generation AI systems are likely to solve an equivalent problem “instantly and essentially free” within twelve months, the rational strategic response is deferral. This logic extends across drug discovery, enterprise software development, materials science, and complex systems engineering [5].
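The Wait Equation can be sketched as a comparison of two plans. In the minimal Python model below, which is an illustrative assumption rather than a standard formula, acting now costs the full price and delivers after the build time, while waiting buys the same result later at a price reduced by a constant annual deflation factor. Each plan is scored by the value the result earns before a fixed planning horizon, minus its cost; all names and dollar figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Plan:
    name: str
    cost: float          # total spend, in dollars
    finish_month: float  # month in which the result becomes available


def deferred_cost(cost_now: float, annual_deflation: float, wait_months: float) -> float:
    """Cost of the same work after waiting, assuming a constant annual deflation factor."""
    return cost_now / annual_deflation ** (wait_months / 12)


def net_value(plan: Plan, value_per_month: float, horizon_months: float) -> float:
    """Value the result earns until the planning horizon, minus what the plan costs."""
    months_of_use = max(0.0, horizon_months - plan.finish_month)
    return value_per_month * months_of_use - plan.cost


def wait_equation(cost_now: float, build_months_now: float, annual_deflation: float,
                  wait_months: float, build_months_later: float,
                  value_per_month: float, horizon_months: float) -> Plan:
    """Return whichever plan, acting now or waiting, has the higher net value."""
    act_now = Plan("act now", cost_now, build_months_now)
    wait = Plan("wait",
                deferred_cost(cost_now, annual_deflation, wait_months),
                wait_months + build_months_later)
    return max((act_now, wait), key=lambda p: net_value(p, value_per_month, horizon_months))


# Hypothetical figures: a $1M, six-month project versus waiting a year for a
# near-instant solution, under 40x annual deflation and a 36-month horizon.
for monthly_value in (50_000, 200_000):
    best = wait_equation(1_000_000, 6, 40, 12, 0, monthly_value, 36)
    print(f"result worth ${monthly_value:,}/month -> {best.name}")
```

With the pharmaceutical example's timings (six months of work now, versus a twelve-month wait for a near-instant solution) and 40x annual deflation, waiting wins when an early result is worth $50,000 a month, but acting now wins at $200,000 a month. The deciding input is how much an earlier result is worth, not the cost of the work alone.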

This creates what might be termed the “Productivity Paradox of Hyperadvancement.” While AI dramatically expands capability frontiers, the Wait Equation may induce a temporary deflationary spiral in innovation investment. Why allocate millions toward solutions today when they manifest as free utility tomorrow? This psychological and economic calculus risks paralyzing entire industry segments as stakeholders await the “free” future, potentially decelerating the very technological progress enabling the transformation [16].

The phenomenon may already be visible in corporate capital expenditure patterns. Organizations can be hesitant to commit to large-scale system implementations, perpetual software licenses, or multi-year development projects when obsolescence timelines compress to quarters rather than years. Aggregated across enterprises, this rational caution could measurably slow business investment growth and innovation velocity.

Strategic navigation requires distinguishing between tasks where waiting proves optimal versus domains where first-mover advantages, proprietary data acquisition, or organizational learning curves justify immediate deployment despite ongoing technological advancement. The framework demands perpetual recalculation as improvement trajectories evolve.
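One way to make this recalculation concrete is to solve a simple two-plan comparison (act now versus wait) for its break-even point. Under illustrative assumptions (constant annual deflation, and a result worth a fixed amount per month once available), the planning horizon cancels out, leaving a monthly value above which acting now is justified. In this sketch that value stands in for whatever makes early deployment worthwhile, such as first-mover advantage, proprietary data acquisition, or organizational learning; the function name and figures are hypothetical.

```python
import math


def breakeven_value_per_month(cost_now: float, annual_deflation: float, wait_months: float,
                              build_months_now: float, build_months_later: float = 0.0) -> float:
    """Monthly value of an early result above which acting now beats waiting.

    Setting the net values of the two plans equal (horizon H, cost C, deflation d):
        V * (H - T_now) - C = V * (H - W - T_later) - C / d ** (W / 12)
    H cancels, giving:
        V = C * (1 - d ** (-W / 12)) / (W + T_later - T_now)
    """
    delay = wait_months + build_months_later - build_months_now
    if delay <= 0:
        # Waiting delivers no later and costs less, so no early-value threshold exists.
        return math.inf
    savings = cost_now * (1 - annual_deflation ** (-wait_months / 12))
    return savings / delay


# Hypothetical $1M project: six-month build now versus a twelve-month wait.
for d in (9, 40, 900):
    v = breakeven_value_per_month(1_000_000, d, 12, 6)
    print(f"{d:>3}x/yr deflation: act now if an early result is worth more than ${v:,.0f}/month")
```

With these hypothetical inputs, the threshold is about $148,000 per month at 9x annual deflation, $162,500 at 40x, and about $166,500 at 900x. Faster deflation raises the bar for acting now, which is why the calculation has to be rerun as improvement trajectories shift.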

The GDP Paradox: When Efficiency Appears as Economic Contraction

We inhabit the epoch of the “GDP Paradox”: breakthrough improvements in human welfare can register as economic contractions within traditional measurement frameworks [6]. GDP measures the market value of final goods and services; hyperdeflation eliminates much of that spending; therefore, extreme efficiency can appear statistically as contraction despite genuine welfare improvements.

Consider AI-driven development of single-dose curative therapies that replace decades of chronic disease management, including hospital stays, recurring pharmaceutical purchases, and specialized medical services. Health outcomes improve dramatically, yet measured output shrinks because the recurring spending on managing the disease disappears [6, 17].
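The mechanism can be shown with a deliberately simple toy model. The sketch below uses hypothetical per-patient figures (not drawn from any cited source), ignores discounting, and assumes the money no longer spent on disease management is not redirected elsewhere in the economy; in practice some of it would be, which would offset part of the measured decline.

```python
def measured_spending(annual_cost: float, years: int, one_time_cost: float = 0.0) -> float:
    """Spending that would show up in output statistics over the period (toy model)."""
    return annual_cost * years + one_time_cost


YEARS = 20  # hypothetical treatment horizon for one patient
chronic_care = measured_spending(annual_cost=30_000, years=YEARS)  # ongoing management
single_dose_cure = measured_spending(annual_cost=0, years=YEARS, one_time_cost=100_000)

print(f"chronic care:     ${chronic_care:,.0f}")
print(f"single-dose cure: ${single_dose_cure:,.0f}")
print(f"measured spending falls by ${chronic_care - single_dose_cure:,.0f} while health improves")
```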

Economic Value Migration – Labor to Capital-Mediated Economy (2022-2030)

- Human Knowledge Labor: Traditional cognitive work (research, analysis, strategy, content creation)

- Compute Infrastructure Ownership: Cloud providers, data centers, GPU/chip manufacturers

- Orchestration & Integration: Platforms connecting AI systems to enterprise infrastructure

*Modeling Disclaimer: This diagram represents a conceptual framework for understanding potential value redistribution patterns based on current automation research and economic analysis. The percentages shown are illustrative projections synthesized from multiple sources, not empirical measurements. Actual value distribution will vary significantly by industry, geography, and regulatory environment. These scenarios should be interpreted as directional indicators of structural economic shifts rather than precise forecasts. Framework synthesized from: McKinsey Future of Work Reports, Goldman Sachs AI Research, Capital Economics Analysis.

This paradox signals a transition from labor-mediated economies (where value is distributed via wages for time and effort) toward capital-mediated systems, where wealth accrues predominantly to owners of capital assets: computational infrastructure, foundational models, and orchestration platforms [18, 19]. Traditional employment-based mechanisms for distributing value become insufficient as wealth generation shifts from contributing labor toward owning productive capital.

Analysis from late 2025 indicates that AI-related capital expenditure now accounts for approximately half of U.S. GDP growth in recent quarters, with business investment in software, equipment, and data centers contributing roughly one percentage point to GDP expansion [20, 21]. This dependence creates fragility. If AI investment merely plateaus rather than continuing to grow exponentially, it could remove 0.5 percentage points from growth; combined with a market correction that weakens wealth effects, the total drag could reach 1-1.5 percentage points annually [20].
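Putting the quoted figures side by side shows how little headroom they imply. As a rough sketch, assuming baseline growth of about 2 percentage points (inferred from AI's roughly one-point contribution being about half of growth, not stated directly in the sources) and treating each drag as a simple subtraction, the scenarios work out as follows.

```python
def remaining_growth(baseline_pp: float, drag_pp: float) -> float:
    """GDP growth left after removing a drag, both in percentage points."""
    return baseline_pp - drag_pp


AI_CONTRIBUTION_PP = 1.0                       # "roughly one percentage point"
BASELINE_GROWTH_PP = AI_CONTRIBUTION_PP / 0.5  # assumption: AI is "approximately half" of growth

scenarios = {
    "AI investment keeps growing": 0.0,
    "AI investment plateaus": 0.5,
    "plateau plus market correction (low)": 1.0,
    "plateau plus market correction (high)": 1.5,
}
for name, drag in scenarios.items():
    print(f"{name:<40} {remaining_growth(BASELINE_GROWTH_PP, drag):.1f}% growth")
```

On these assumptions, growth of roughly 2% would fall to about 1.5% in the plateau case and to between 0.5% and 1% if a market correction follows, which is the fragility described above.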

We need to replace GDP with alternative metrics that capture “Utility Abundance”: frameworks measuring welfare improvements, capability enhancements, and quality-of-life gains rather than transaction volumes. Otherwise, our measurement systems will signal failure precisely when we solve humanity’s most pressing challenges. The revolution in economic accounting must keep pace with the technological one, or our analytical frameworks will systematically mistake progress for decline.

From Generation to Orchestration: The New Competitive Frontier

If intelligence generation becomes a free commodity, where does value migrate? The answer crystallizes around “Agentic Automation”: the orchestration infrastructure enabling abundant, inexpensive computational intelligence to interact productively with enterprise systems of record, legacy architectures, and operational workflows.

In this transformed landscape, the “plumbing” (the integration layer connecting AI capabilities to functional business processes) becomes the critical value-capture mechanism. The “thinking” approaches zero cost, but the “doing” (the bespoke challenge of implementation within specific organizational contexts) remains complex, valuable, and defensible. This represents the strategic pivot for platforms like Zaptiva: functioning as an essential orchestration layer, transforming raw computational capacity into operational functionality.

Zaptiva’s positioning within this hyperdeflationary environment is illustrative. As a low-code SaaS platform specializing in business process automation and ERP integration, Zaptiva addresses precisely the orchestration challenge emerging as a critical bottleneck [7]. The platform’s capabilities in connecting diverse data sources, executing complex transformations, and synchronizing results across enterprise applications (including QuickBooks, with roadmap expansion toward NetSuite, Sage Intacct, MS Dynamics, Xero, and Zoho) exemplify the infrastructure required to actualize AI abundance within operational realities.

Consider Zaptiva’s case study with Complete Business Group (CBG), which automated 100+ spreadsheets managing sales commissions, reducing a seven-day monthly process to under one day while improving data quality and reducing errors [7]. This transformation illustrates orchestration value: the intelligence required for commission calculations becomes computationally trivial, but connecting that intelligence to existing data sources, business rules, validation requirements, and downstream systems demands sophisticated integration infrastructure.
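The orchestration point can be illustrated with a small extract-validate-calculate-load sketch. It is hypothetical: it is not Zaptiva's implementation or API, and the spreadsheet contents, rep names, and flat commission rates are invented for illustration. Notice how little of the code is the commission arithmetic itself; most of it connects sources, enforces rules, routes exceptions, and shapes output for a downstream system.

```python
from __future__ import annotations

import csv
import io
from dataclasses import dataclass

# Hypothetical inputs: two of the many commission spreadsheets, exported as CSV text.
SHEETS = {
    "east.csv": "rep,deal_id,amount\nAna,E-1,12000\nBo,E-2,8000\n",
    "west.csv": "rep,deal_id,amount\nAna,W-7,5000\nCy,W-8,-300\n",
}
# Hypothetical business rule: a flat commission rate per sales rep.
RATES = {"Ana": 0.10, "Bo": 0.08, "Cy": 0.08}


@dataclass
class CommissionLine:
    rep: str
    deal_id: str
    commission: float


def extract(sheets: dict[str, str]) -> list[dict[str, str]]:
    """Connector step: gather rows from every source, tagging where each came from."""
    rows = []
    for source, text in sheets.items():
        for row in csv.DictReader(io.StringIO(text)):
            row["source"] = source
            rows.append(row)
    return rows


def validate(row: dict[str, str]) -> list[str]:
    """Validation step: list the problems with a row (empty means it is clean)."""
    problems = []
    if row["rep"] not in RATES:
        problems.append(f"unknown rep {row['rep']}")
    if float(row["amount"]) <= 0:
        problems.append(f"non-positive amount {row['amount']}")
    return problems


def calculate(row: dict[str, str]) -> CommissionLine:
    """The 'intelligence' step: trivial arithmetic once the data is clean and in one place."""
    commission = round(float(row["amount"]) * RATES[row["rep"]], 2)
    return CommissionLine(row["rep"], row["deal_id"], commission)


def run(sheets: dict[str, str]) -> tuple[dict[str, float], list[tuple[str, str, list[str]]]]:
    """Extract, validate, calculate, and shape per-rep totals for a downstream system."""
    totals: dict[str, float] = {}
    exceptions = []
    for row in extract(sheets):
        problems = validate(row)
        if problems:
            exceptions.append((row["source"], row["deal_id"], problems))
            continue
        line = calculate(row)
        totals[line.rep] = round(totals.get(line.rep, 0.0) + line.commission, 2)
    return totals, exceptions


payload, exceptions = run(SHEETS)
print(payload)     # per-rep totals ready to sync, e.g. to payroll or an ERP
print(exceptions)  # rows routed to a human for review
```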

The architectural pattern generalizes: raw intelligence utility is useless when siloed. The entity that positions itself as the “Plumber” of the intelligence age (connecting autonomous capabilities to real-world operational systems) captures more value than the “Philosopher” generating insights. Intelligence is the utility; orchestration is the service that makes the utility functional and monetizable.

The strategic implications are profound. Competitive advantage migrates from proprietary algorithms or computational scale toward integration depth, system interoperability, workflow automation sophistication, and the ability to embed AI capabilities within existing operational contexts. Organizations that command these orchestration capabilities (whether through proprietary platforms, strategic partnerships, or implementation expertise) are well positioned in a post-scarcity intelligence economy.

Navigating the Post-Labor Horizon

The transition from labor-mediated toward capital-and-compute-mediated economic systems constitutes the defining transformation of our century. We advance toward a horizon where primary scarcity shifts from “thinking” capacity toward the capability to deploy that thinking effectively within legacy structures, regulatory frameworks, and operational realities of contemporary global commerce.

As we navigate the 40x hyperdeflationary curve, the fundamental question emerges: In a world where PhD-level cognition may cost essentially nothing, what value proposition do you offer? According to current research trends, the answer appears to reside not in effort expenditure or knowledge generation (both increasingly commoditized) but in strategic ownership and operational mastery of the orchestration systems integrating abundant intelligence into functional, value-creating workflows.

The enterprises, platforms, and professionals who recognize this shift (who position themselves not as sellers of intelligence but as architects of orchestration) will capture disproportionate value within the post-scarcity cognitive economy. For organizations navigating this transformation, the imperative is clear: invest in the infrastructure, expertise, and systems that bridge the gap between computational abundance and operational reality.

The hyperdeflationary shift is not approaching; according to multiple industry sources, it appears to be here, measurable in quarterly earnings, employment statistics, and rapidly evolving capability benchmarks. The strategic question is not whether to adapt, but how swiftly and comprehensively your organization can pivot toward the orchestration paradigm before competitive advantages within traditional frameworks potentially diminish.

Experience the Orchestration Advantage

The transformation from cognitive scarcity to computational abundance demands more than awareness; it requires an actionable implementation strategy. As intelligence becomes utility, your competitive positioning depends on how effectively you orchestrate that utility within your operational architecture.

Zaptiva provides the integration and automation infrastructure essential for navigating this hyperdeflationary landscape. Our low-code platform connects AI capabilities to your existing ERP, CRM, and business systems, transforming abstract computational power into tangible operational gains.

If you’re ready to position your organization advantageously within the post-scarcity intelligence economy, schedule a demo with our team. Discover how sophisticated orchestration infrastructure translates abundant intelligence into measurable business value, because in an era where thinking costs nothing, implementation architecture determines everything.

Key References

- Source [1]: Cottier, B., Snodin, B., Owen, D., & Adamczewski, T. (2025). “LLM inference prices have fallen rapidly but unequally across tasks.” Epoch AI. Retrieved from https://epoch.ai/data-insights/llm-inference-price-trends

- Source [2]: Stanford Institute for Human-Centered AI. (2025). “The AI Index 2025 Annual Report.” Stanford University. Retrieved from https://hai.stanford.edu/ai-index/2025-ai-index-report

- Source [3]: McKinsey Global Institute. (2025). “Agents, Robots, and Us: Skill Partnerships in the Age of AI.” Retrieved from https://www.mckinsey.com

- Source [4]: Fisher, A. (2025). “McKinsey’s November 2025 Bombshell: 57% of Work Hours Already Automatable.” Medium. Retrieved from https://medium.com/@angelaf_56127/mckinseys-november-2025-bombshell-57-of-work-hours-already-automatable-e57bd9b706f1

- Source [5]: Various sources discussing AI advancement timelines and strategic investment decision frameworks in technology adoption cycles, 2024-2025.

- Source [6]: Lango, L. (2025). “5 Major Economic Predictions for 2026.” InvestorPlace. Retrieved from https://investorplace.com/hypergrowthinvesting/2025/12/5-major-economic-predictions-for-2026/

- Source [7]: Zaptiva. (2026). “Integrate, Automate & Elevate Your Business.” Retrieved from https://www.zaptiva.com/

- Source [8]: Multiple industry analyses comparing AI task completion speeds and costs relative to human professional benchmarks, 2024-2025.

- Source [9]: Barclays, L. (2026). “Who Captures the Value When AI Inference Becomes Cheap?” Retrieved from https://lesbarclays.substack.com/p/who-captures-the-value-when-ai-inference

- Source [10]: Cerulean. (2025). “The Decreasing Cost of Intelligence.” Retrieved from https://www.joincerulean.com/blog/the-decreasing-cost-of-intelligence

- Source [11]: Various economic research on professional wage compression and automation impacts across knowledge sectors, 2024-2025.

- Source [12]: Unite.AI. (2025). “AI’s Great Role Reversal: White-Collar Jobs Hit 10x Harder.” Retrieved from https://www.unite.ai/ais-great-role-reversal-white-collar-jobs-hit-10x-harder-how-should-organisations-prepare/

- Source [13]: WhatJobs. (2025). “AI Job Disruption 2025: Entry-Level White Collar Roles Face ‘Bloodbath’.” Retrieved from https://www.whatjobs.com/news/ai-job-disruption-2025/

- Source [14]: Quartz. (2025). “McKinsey layoffs show white-collar job cuts are spreading.” Retrieved from https://qz.com/mckinsey-layoffs-white-collar-jobs-ai

- Source [15]: McKinsey & Company. (2017). “Jobs lost, jobs gained: What the future of work will mean for jobs, skills, and wages.” Retrieved from https://www.mckinsey.com/featured-insights/future-of-work/jobs-lost-jobs-gained-what-the-future-of-work-will-mean-for-jobs-skills-and-wages

- Source [16]: Various economic analyses of corporate capital expenditure patterns and innovation investment cycles during periods of rapid technological transition.

- Source [17]: Economic research on GDP measurement challenges in digital transformation contexts and welfare economics frameworks.

- Source [18]: Goldman Sachs Research and various capital economics analyses regarding wealth distribution shifts in AI-driven economies, 2025.

- Source [19]: Multiple sources on the transition from labor-intensive to capital-intensive value creation models in advanced economies.

- Source [20]: FinTech Magazine. (2025). “How AI’s Economic Weight Impacts Fintech’s Future.” Retrieved from https://fintechmagazine.com/news/has-the-us-economy-become-dependent-on-ai-investment

- Source [21]: St. Louis Federal Reserve. (2026). “Tracking AI’s Contribution to GDP Growth.” Retrieved from https://www.stlouisfed.org/on-the-economy/2026/jan/tracking-ai-contribution-gdp-growth